Single Shot MultiBox Detector (SSD) on Keras 1.2.2 and Keras 2

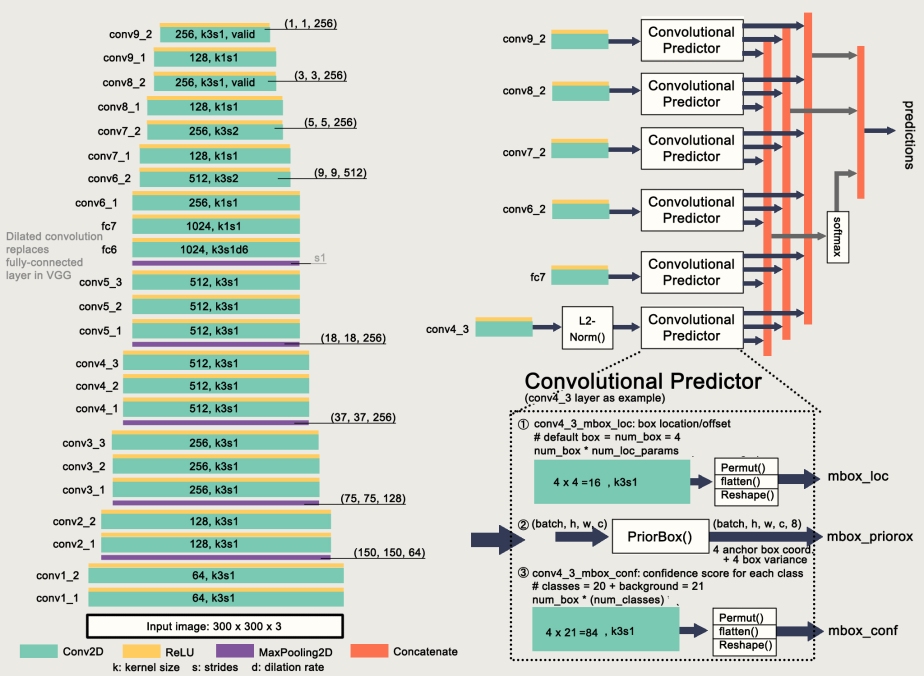

SSD is a deep neural network that achieves 75.1% mAP on VOC2007, outperforming Faster R-CNN while running at a high FPS. (arXiv paper)

Mask-RCNN Keras implementation from Matterport’s GitHub

GitHub repo here.

ssd_download_essentials.ipynb: This notebook runs shell commands that download the code and model weights file, pip install the moviepy package, etc.

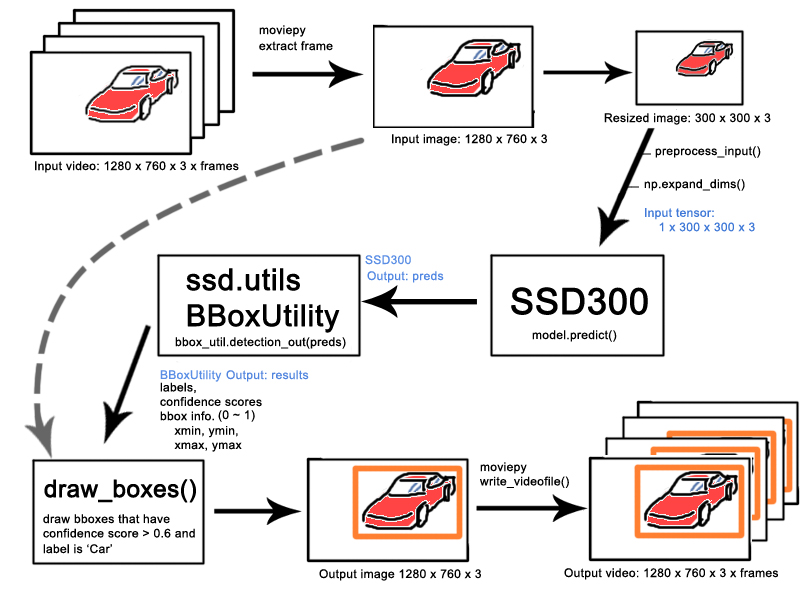

SSD_car_detection.ipynb: This notebook is based on SSD.ipynb, slightly modified to perform vehicle/lane detection on project_video.mp4.

Mask_RCNN_download_essentials.ipynb: This notebook runs shell commands that git clone the code, download the model weights file, pip install packages, etc.

Mask_RCNN_demo_car_detection.ipynb: Runs Mask-RCNN inference on project_video.mp4.

Result using SSD:

Notes:

With a GPU (K80), I got about 12 frames per second processing the video.

Without a GPU, it was 0.1 frames per second.

I did not train the model on the car images provided by the Udacity course. Instead, I used only the weights file from the ssd_keras GitHub repo above, which was probably trained on VOC2007.
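To make FPS numbers like these comparable, it helps to time the whole per-frame pipeline the same way on every machine. Below is a minimal sketch; `measure_fps` is a hypothetical helper, not something from the notebooks, where `process_frame` stands in for whatever runs per frame (e.g. SSD inference plus box drawing) and `frames` for any iterable of frames.

```python
import time
from typing import Any, Callable, Iterable


def measure_fps(process_frame: Callable[[Any], Any], frames: Iterable[Any]) -> float:
    """Run process_frame on every frame and return the average frames per second."""
    count = 0
    start = time.perf_counter()
    for frame in frames:
        process_frame(frame)  # e.g. detector inference plus drawing boxes
        count += 1
    elapsed = time.perf_counter() - start
    # Guard against a zero-length measurement on extremely fast runs.
    return count / elapsed if elapsed > 0 else float("inf")
```

Feeding the same frames to the GPU and CPU runs keeps the comparison fair; with moviepy, for example, the frames could come from `VideoFileClip("project_video.mp4").iter_frames()`.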

A fancier version with lane detection and smoothed bounding boxes is shown below (full video). However, it runs at a terrible 1 FPS, caused by the non-optimized lane detection algorithm.
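The post doesn't say how the boxes were smoothed. One simple option, assumed here rather than taken from the original notebook, is an exponential moving average of each car's box coordinates across frames. `BoxSmoother` and `alpha` are hypothetical names, and matching each new detection to the right smoother (e.g. by box overlap) is not shown.

```python
from typing import Optional, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax) in pixels


class BoxSmoother:
    """Exponential moving average of one car's box across frames.

    alpha near 1 follows new detections closely; alpha near 0 smooths harder.
    """

    def __init__(self, alpha: float = 0.3) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state: Optional[Box] = None

    def update(self, box: Box) -> Box:
        new = tuple(float(v) for v in box)
        if self.state is None:
            self.state = new  # first detection: nothing to average with yet
        else:
            a = self.alpha
            # Blend the new box with the running average, coordinate by coordinate.
            self.state = tuple(a * n + (1 - a) * o for n, o in zip(new, self.state))
        return self.state
```

With `alpha=0.5`, a box jumping from `(0, 0, 10, 10)` to `(10, 10, 20, 20)` is reported as `(5.0, 5.0, 15.0, 15.0)`, so a one-frame jitter moves the drawn box only halfway.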

A comprehensive explanation of SSD can be found in the following links. (The first slide deck is especially helpful.)

References:

1. SSD: Single Shot MultiBox Detector (deepsystem.io slide)

2. Keras implementation by pierluigiferrari

3. Keras implementation by rykov8

4. CNN Object Detection and Segmentation (Part 3): SSD Explained (in Chinese)

5. SSD (Single Shot MultiBox Detector) Algorithm and Caffe Code Explained [repost] (in Chinese)

Mask-RCNN (updated 7 Nov., 2017):

Matterport released a Mask-RCNN Keras implementation with weights pre-trained on the COCO dataset. I quickly ran it on the video for car detection. Here’s the result (full video):

The confidence score threshold was set to 0.6. A threshold of 0.65 gave fewer false positives but more missed boxes (the box on the same car appearing discontinuously). The video shows an impressive segmentation result and stable bounding boxes.
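The trade-off between 0.6 and 0.65 is just a filter on per-detection scores. A minimal sketch, assuming detections arrive as parallel lists of boxes, scores and class ids (loosely modeled on a per-image detector result); `filter_detections` is a hypothetical helper and `CAR_ID = 3` is an assumed COCO class id, so check it against your own class list.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # any fixed 4-number box layout
CAR_ID = 3  # assumed COCO class id for "car"; check against your class list


def filter_detections(boxes: Sequence[Box], scores: Sequence[float],
                      class_ids: Sequence[int], keep_class: int = CAR_ID,
                      threshold: float = 0.6) -> List[Tuple[Box, float]]:
    """Keep detections of keep_class whose confidence is at least threshold."""
    return [(box, score)
            for box, score, cls in zip(boxes, scores, class_ids)
            if cls == keep_class and score >= threshold]
```

With `scores = [0.62, 0.9, 0.99]` and `class_ids = [3, 3, 1]`, threshold 0.6 keeps two cars while 0.65 drops the 0.62 box: fewer false positives, but a real car scored at 0.62 in that frame would lose its box.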

The input resolution is 1024 x 1024, so a lower FPS is expected. On the other hand, a high input resolution benefits the segmentation result (this is what we saw in the top-ranked solutions of the Kaggle Carvana competition).
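A rough sketch of the arithmetic behind "high resolution, lower FPS": scale the frame so its longer side is 1024, then pad it to a square. This is a simplified version of aspect-preserving square padding, not necessarily identical to Matterport's own resize code, and `square_resize_plan` is a hypothetical name.

```python
from typing import Tuple


def square_resize_plan(height: int, width: int, target: int = 1024
                       ) -> Tuple[float, Tuple[int, int], Tuple[int, int, int, int]]:
    """Scale so the longer side equals target, then pad to target x target.

    Returns (scale, (new_h, new_w), (top, bottom, left, right) padding).
    """
    scale = target / max(height, width)
    new_h, new_w = round(height * scale), round(width * scale)
    pad_h, pad_w = target - new_h, target - new_w
    top, left = pad_h // 2, pad_w // 2  # split padding evenly on both sides
    return scale, (new_h, new_w), (top, pad_h - top, left, pad_w - left)
```

Assuming 1280 x 720 frames, this gives scale 0.8, a 576 x 1024 resized image, and 224 rows of padding above and below. The padded 1024 x 1024 input has about 11.7 times as many pixels as a 300 x 300 input (1,048,576 vs 90,000), assuming the common SSD300 input size, which is one reason for the FPS gap.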

FPS: 1.5 (on AWS EC2 p2.xlarge.)

Comparisons:

1. HOG features + linear SVC + sliding window, which seems to be the default approach in the Udacity course? (source: YouTube video)

FPS: about 2.5 (according to this jupyter notebook)
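The sliding-window part of that pipeline is what makes it slow: every window has to go through HOG feature extraction and the SVC. A minimal sketch of window generation; `sliding_windows` and its 50% overlap default are illustrative assumptions, and HOG extraction and classification are not shown.

```python
from typing import Iterator, Optional, Tuple


def sliding_windows(img_w: int, img_h: int, win: int, overlap: float = 0.5,
                    y_start: int = 0, y_stop: Optional[int] = None
                    ) -> Iterator[Tuple[int, int, int, int]]:
    """Yield (x1, y1, x2, y2) for square windows of side win."""
    if y_stop is None:
        y_stop = img_h  # optionally restrict the search to the road region
    step = max(1, int(win * (1 - overlap)))
    for y in range(y_start, y_stop - win + 1, step):
        for x in range(0, img_w - win + 1, step):
            yield (x, y, x + win, y + win)
```

For a 256 x 128 image with 64-pixel windows at 50% overlap this yields 21 windows; a 1280 x 720 frame at the same single scale already yields 819, each needing its own HOG + SVC pass, and real pipelines usually search several window sizes.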

2. Deep learning + heat map approach by Marcus Erbar from this YouTube video, trained on the car images provided by Udacity.

Obviously, the Udacity training data worsens the model's predictions. The bounding box does not fit the entire car; rather, it tends to fit the back/side views. In contrast, the SSD model predicts better, probably because VOC2007 provides more diverse and higher-quality car images.

FPS: 8 (according to its GitHub repo).
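The post doesn't describe that implementation's heat map step, so here is a generic sketch of the heat-map recipe commonly used for this project, as an assumption: each positive detection adds 1 to the pixels it covers, pixels at or below a threshold are discarded (suppressing isolated false positives), and each remaining connected region becomes one merged box. `heatmap_boxes` is a hypothetical name; pure Python is used for clarity, while real code would use NumPy arrays, something like `scipy.ndimage.label` for the labeling step, and often accumulate heat over several frames.

```python
from collections import deque
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2), x2/y2 exclusive


def heatmap_boxes(boxes: Sequence[Box], width: int, height: int,
                  threshold: int = 1) -> List[Box]:
    """Merge overlapping positive boxes via a thresholded heat map."""
    heat = [[0] * width for _ in range(height)]
    for x1, y1, x2, y2 in boxes:
        for y in range(max(0, y1), min(height, y2)):
            for x in range(max(0, x1), min(width, x2)):
                heat[y][x] += 1  # each detection "votes" for its pixels

    seen = [[False] * width for _ in range(height)]
    merged: List[Box] = []
    for sy in range(height):
        for sx in range(width):
            if heat[sy][sx] <= threshold or seen[sy][sx]:
                continue
            # Flood-fill one connected hot region (4-connectivity).
            seen[sy][sx] = True
            queue = deque([(sx, sy)])
            xmin = xmax = sx
            ymin = ymax = sy
            while queue:
                x, y = queue.popleft()
                xmin, xmax = min(xmin, x), max(xmax, x)
                ymin, ymax = min(ymin, y), max(ymax, y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx] and heat[ny][nx] > threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            merged.append((xmin, ymin, xmax + 1, ymax + 1))
    return merged
```

With boxes `(0, 0, 4, 4)`, `(2, 2, 6, 6)` and `(10, 10, 12, 12)` and threshold 1, only the overlap of the first two survives, giving `[(2, 2, 4, 4)]`; the lone third box is discarded.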

Other approaches on youtube:

3. YOLO

In this video, the YOLO model is more sensitive than SSD: it constantly detects cars in the opposite lane (possibly a result of a lower confidence threshold). On the other hand, the size of the bounding box is more unstable (see 0:25 onward), and it sometimes shows more than one bounding box on a car (same in this video). I wonder if non-maximum suppression is applied in their implementations.

FPS: 21 (source)
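For reference, greedy non-maximum suppression is what normally removes duplicate boxes on the same car: sort detections by score, keep the best, drop any remaining box whose IoU with a kept box exceeds a threshold, and repeat. A minimal sketch; the 0.45 default is a common choice (it is the value used in the SSD paper), not something known about the YOLO implementations above, and real detectors usually run this per class.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.45) -> List[int]:
    """Greedy non-maximum suppression; returns indices of kept boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep
```

Two heavily overlapping boxes `(0, 0, 10, 10)` and `(1, 1, 11, 11)` have IoU 81/119 ≈ 0.68, so only the higher-scoring one survives: `nms([(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)], [0.9, 0.8, 0.7])` returns `[0, 2]`.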

4. SVM?

5. Faster-RCNN

Although the bounding boxes keep zigzagging, they generally cover the entire car. I would say it's relatively stable. The most impressive part is that the confidence score stays high (>0.95) even when two cars start overlapping. This reminds me of a blog post reporting that Faster-RCNN gave better detection results than SSD in the Kaggle Fisheries Monitoring competition.

It should be mentioned that the comparisons above are not rigorous in any sense. I considered neither the hyperparameters (such as input resolution and confidence threshold), the hardware (CPU or GPU), nor hacks like bounding-box smoothing and compensation for missing bounding boxes, all of which have a nontrivial effect on detection accuracy and FPS. The comparisons are just my observations and should not be taken seriously.

However, I would like to recommend a paper from Google that gives a comprehensive investigation of detection architectures. It’s readable and inspiring.

TODO:

1. Apply a Kalman filter to smooth the bounding box.

2. Combine lane and vehicle detection (DONE, but video processing runs at a terrible 1 FPS).
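For the Kalman filter TODO, a minimal sketch under stated assumptions: each box coordinate gets its own constant-velocity filter, process noise `q` is simply added to both diagonal covariance terms, and `q` and `r` are untuned guesses. When the detector misses a car in a frame, the filter coasts on its prediction, which is one way to compensate for missing boxes. `Kalman1D` and `BoxKalman` are hypothetical names, and associating detections with tracks across frames (e.g. by IoU) is not shown.

```python
from typing import Optional, Sequence, Tuple


class Kalman1D:
    """Constant-velocity Kalman filter for one box coordinate."""

    def __init__(self, first: float, q: float = 1.0, r: float = 10.0) -> None:
        self.p, self.v = float(first), 0.0       # state: position, velocity
        self.P = [[r, 0.0], [0.0, 100.0]]        # initial covariance (a guess)
        self.q, self.r = q, r                    # process / measurement noise

    def predict(self, dt: float = 1.0) -> float:
        (a, b), (c, d) = self.P
        self.p += dt * self.v                    # x = F x
        # P = F P F^T + Q with F = [[1, dt], [0, 1]] and Q = q * I
        self.P = [[a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
                  [c + dt * d, d + self.q]]
        return self.p

    def update(self, z: float) -> float:
        (a, b), (c, d) = self.P
        s = a + self.r                           # innovation variance
        k0, k1 = a / s, c / s                    # Kalman gain
        y = z - self.p                           # innovation
        self.p += k0 * y
        self.v += k1 * y
        # P = (I - K H) P with H = [1, 0]
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]
        return self.p


class BoxKalman:
    """Smooth an (x1, y1, x2, y2) box with one filter per coordinate."""

    def __init__(self, box: Sequence[float], q: float = 1.0, r: float = 10.0) -> None:
        self.filters = [Kalman1D(v, q, r) for v in box]

    def step(self, box: Optional[Sequence[float]] = None) -> Tuple[float, ...]:
        """Predict one frame ahead; correct with box if the detector found one."""
        preds = [f.predict() for f in self.filters]
        if box is None:
            return tuple(preds)                  # missed detection: coast
        return tuple(f.update(v) for f, v in zip(self.filters, box))
```

Call `step(box)` once per frame with the matched detection, or `step()` when the car was not detected. Raising `r` relative to `q` trusts the detector less and smooths more.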

Lane detection references:

https://github.com/sahiljuneja/Udacity-SDCND-Term-1

https://github.com/jessicayung/self-driving-car-nd

https://pdnotebook.com/image-analysis-intro-using-python-opencv-18791f4edf22

Good job, Shaoan! And thank you for sharing your knowledge!

Thanks!

Thank you for the post

How can I implement a Kalman filter with SSD so that an object is counted when it reaches a certain location? How are the bounding boxes of the SSD model used in the Kalman filter so that the object is tracked?

Sample code, please.

Please help, I will hopefully donate for the help.

Thank you in advance for your response…
